In the field of Natural Language Processing (NLP), there are various techniques and models that have revolutionized the way machines understand and generate human language. One such breakthrough model is GPT, which stands for “Generative Pre-trained Transformer.” In this blog post, we will delve into the full form of GPT and explore its significance in NLP.

What is GPT?

GPT, or Generative Pre-trained Transformer, is an advanced deep learning model that has gained significant attention in the field of NLP. Developed by OpenAI, GPT utilizes a transformer architecture, which is a type of neural network model that has proven to be highly effective in processing sequential data.

The Full Form of GPT

The full form of GPT, as mentioned earlier, is “Generative Pre-trained Transformer.” Let’s break down each component of the acronym:

- Generative: GPT is a generative model, meaning it is capable of generating new content based on the patterns and information it has learned during training. This allows GPT to generate human-like text that is coherent and contextually relevant, making it a powerful tool for various applications such as language translation, content generation, and more.

- Pre-trained: GPT is pre-trained on a massive amount of data before being fine-tuned for specific tasks. During pre-training, the model learns from a large corpus of text data, such as books, articles, and websites, to develop a general understanding of language patterns and structures. This pre-training phase enables the model to capture a wide range of linguistic knowledge.

- Transformer: The transformer architecture is a key component of GPT. It was introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al. Transformers have emerged as a powerful alternative to traditional recurrent neural networks (RNNs) for sequential data processing tasks. They rely on an attention mechanism that lets each word draw on information from other words in the sequence, so they excel at capturing long-range dependencies in text and have become the backbone of many state-of-the-art NLP models.
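
To make the transformer component a little more concrete, here is a minimal sketch of scaled dot-product attention with a causal mask, which is the kind of attention GPT-style models use so that each position only looks at earlier positions. It is an illustration under simplifying assumptions, not OpenAI's implementation: the function names and the tiny 2-dimensional vectors are made up, and the input vectors are used directly as queries, keys, and values, whereas a real transformer first passes them through learned projection matrices.

```python
import math

def softmax(scores):
    """Turn a list of scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i only sees positions 0..i."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the current position against itself and every earlier position.
        scores = [
            sum(qc * kc for qc, kc in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the visible value vectors.
        out = [
            sum(w * values[j][c] for j, w in enumerate(weights))
            for c in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three token positions with made-up 2-dimensional vectors (hypothetical data).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
```

Here the first position can only attend to itself, while the third position spreads its attention over all three positions and gives the most weight to the vector most similar to its own query.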

How Does GPT Work?

GPT works by leveraging a combination of unsupervised learning and fine-tuning techniques. Here’s an overview of the process:

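- Pre-training: During pre-training, the GPT model learns to predict the next word (more precisely, the next token) in a sequence, given everything that comes before it. It does this by training on a large corpus of text data with an autoregressive (causal) language modeling objective. This differs from the masked language modeling objective used by models such as BERT, which fill in hidden words using context from both sides. By predicting the next word, the model develops an understanding of contextual relationships between words and captures various syntactic and semantic patterns.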

- Fine-tuning: After pre-training, the model is fine-tuned on specific tasks using supervised learning. This involves training the model on task-specific labeled data to adapt it to perform well on specific downstream tasks, such as text classification, sentiment analysis, or question answering. Fine-tuning allows the model to transfer its learned knowledge from pre-training to specific real-world applications.
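
The idea of learning from raw text by predicting what comes next can be sketched with a toy model. The example below is a deliberately simple stand-in, assuming a tiny made-up corpus and hypothetical function names: it counts which word follows which (a bigram model) and then generates text one word at a time. GPT replaces the counting with a large transformer network, but the generate-one-word-then-repeat loop is the same in spirit.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(model, start_word, max_words=5):
    """Generate text greedily, one predicted word at a time."""
    output = [start_word]
    while len(output) < max_words:
        next_word = predict_next(model, output[-1])
        if next_word is None:
            break
        output.append(next_word)
    return " ".join(output)

# A tiny made-up corpus; real pre-training uses vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)

print(predict_next(model, "the"))          # most common word after "the"
print(generate(model, "sat", max_words=4))
```

No labels were needed here: the "correct answer" for each position is simply the next word in the text, which is why this style of training scales to very large unlabeled corpora.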

OpenAI, a leading artificial intelligence research laboratory, is the mastermind behind the creation of ChatGPT. OpenAI was founded in 2015 with a mission to ensure that artificial general intelligence (AGI) benefits all of humanity. The organization is renowned for its groundbreaking advancements in machine learning and natural language processing.

ChatGPT is the culmination of years of research and development by a team of brilliant scientists, engineers, and researchers at OpenAI. Leveraging their expertise in deep learning and neural networks, they have harnessed the power of language models to create an AI system that can engage in meaningful conversations.

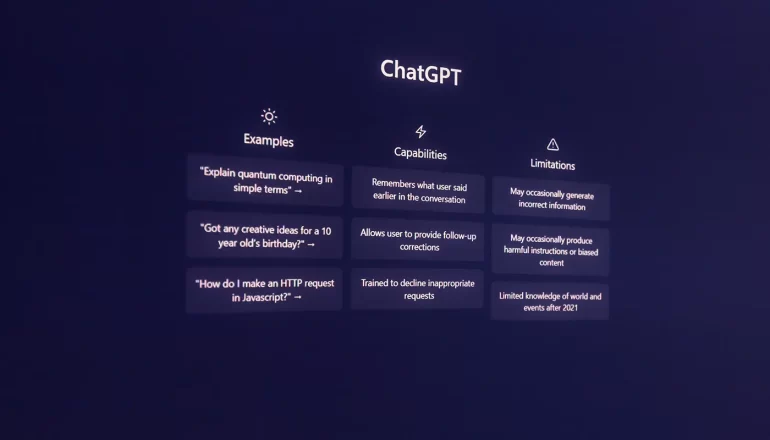

What can ChatGPT do?

ChatGPT is an incredibly versatile language model that can perform a wide range of tasks. Its ability to understand and generate human-like text makes it a powerful tool in various domains. Here are some of the tasks that ChatGPT can excel at:

- Content Generation: ChatGPT can generate high-quality content for blogs, articles, product descriptions, social media posts, and more. It can help businesses streamline their content creation process and produce engaging and informative material.

- Customer Support: With its conversational abilities, ChatGPT can assist in customer support by answering frequently asked questions, providing troubleshooting guidance, and offering personalized recommendations. This reduces the burden on human support agents and enhances overall customer experience.

- Language Translation: ChatGPT can aid in language translation by providing accurate translations between different languages. This has significant implications for global communication and breaking down language barriers.

- Educational Assistance: Students and educators can leverage ChatGPT as a study companion. It can provide explanations, help solve problems, offer writing suggestions, and facilitate learning across various subjects.

- Creative Writing: ChatGPT’s natural language generation capabilities make it an excellent tool for creative writing. It can assist authors, poets, and screenwriters in brainstorming ideas, developing characters, and generating unique storylines.

How to Use ChatGPT?

Using ChatGPT is straightforward. Follow these steps:

- Visit the OpenAI website or platform where ChatGPT is hosted.

- Depending on the platform, you may need to sign in or create an account.

- Once logged in, you’ll typically find a prompt or text box where you can type your queries or statements.

- Type your question or statement and press enter or click the “submit” button.

- ChatGPT will process your input and respond in a conversational manner.

What are the Use Cases of GPT?

GPT models have a wide range of applications:

- Automated Customer Support: They can handle customer queries and provide 24/7 support.

- Content Creation: They are used to draft articles and blog posts and to generate creative content.

- Education: They can be used as learning aids, providing explanations on various topics.

- Translation Services: GPT models can be used to translate text between different languages.

- Personal Assistants: They can be utilized in virtual assistants to enhance their conversational abilities.

How Was GPT-3 Trained?

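GPT-3 was trained using a machine learning approach called unsupervised learning (often described more precisely as self-supervised learning, because the training signal comes from the text itself). Here’s a simplified explanation: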

- Initially, the model was trained with a massive corpus of text data from the internet.

- The model has no record of which specific documents were part of its training set; it retains learned patterns rather than a catalog of its sources.

- The model was trained to predict the next word in a sentence, which helps it understand context and generate human-like text.

- The process involved adjusting the parameters of the model to reduce the difference between its predictions and the actual words that appeared next in its training sentences.

- This training allows GPT-3 to generate coherent and contextually relevant sentences based on the input it receives.
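
The parameter-adjustment step described above can be sketched in a few lines. This is a simplified illustration, not GPT-3's actual training code: the three-word vocabulary, the learning rate, and the function names are assumptions, and the scores (logits) are updated directly, whereas a real model updates millions or billions of network weights through backpropagation. The loss used is cross-entropy, which is small when the model gives high probability to the word that actually came next.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution over the vocabulary."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target_index):
    """Loss is low when the true next word receives high probability."""
    return -math.log(probs[target_index])

def training_step(logits, target_index, learning_rate=0.5):
    """One gradient-descent update for a single next-word prediction."""
    probs = softmax(logits)
    # Gradient of softmax + cross-entropy: probs[k] - 1 for the target, probs[k] otherwise.
    grads = [p - (1.0 if k == target_index else 0.0) for k, p in enumerate(probs)]
    return [v - learning_rate * g for v, g in zip(logits, grads)]

vocab = ["mat", "moon", "fish"]   # hypothetical candidates for the next word
target = vocab.index("mat")       # the word that actually came next
logits = [0.0, 0.0, 0.0]          # untrained: every word is equally likely

losses = []
for _ in range(20):
    losses.append(cross_entropy(softmax(logits), target))
    logits = training_step(logits, target)

print(round(losses[0], 3), round(losses[-1], 3))
```

Each step nudges the scores so that the correct word becomes more probable, and the recorded loss falls from its starting value of ln(3) as training proceeds.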

What can we expect from GPT-4?

With the groundbreaking advancements made in GPT-3, there is much anticipation surrounding the potential capabilities of GPT-4. We don’t have details about GPT-4 yet, but we can predict improvements and features by looking at what was done in previous versions.

- Enhanced Contextual Understanding: GPT-4 is likely to have a deeper understanding of context, enabling it to generate more coherent and contextually appropriate responses. This could result in more meaningful and engaging conversations.

- Improved Fine-tuning Abilities: GPT-4 may offer enhanced fine-tuning capabilities, allowing users to tailor the model’s responses to specific requirements more effectively. This would provide greater control over the output and make it easier to align with desired outcomes.

- Expanded Knowledge Base: GPT-4 could be trained on an even larger and more diverse dataset, incorporating a broader range of topics and domains. This would enable the model to possess a more comprehensive knowledge base, resulting in more accurate and insightful responses.

- Better Understanding of Nuances: GPT-4 might exhibit improved sensitivity to nuances in language, better understanding humor, sarcasm, and idiomatic expressions. This would make conversations with the model feel more natural and human-like.

- Reduced Bias: OpenAI has been committed to addressing bias issues in AI models. With GPT-4, we can expect further efforts to minimize biases and ensure fair and unbiased responses across various demographics.

GPT-4 may have different features than expected, as OpenAI is constantly pushing AI research boundaries. It has the potential to revolutionize AI interaction.

Applications of GPT

The remarkable ability of GPT to produce coherent and contextually relevant text has led to its extensive use in a wide range of domains. Here are some notable applications:

- Language Translation: GPT can be used to translate text from one language to another. By providing a source language input, the model can generate high-quality translations in the target language.

- Content Generation: GPT can automatically generate human-like text, making it useful for tasks such as writing articles, product descriptions, or even creative writing.

- Chatbots and Virtual Assistants: GPT can power chatbots and virtual assistants by generating natural language responses based on user queries or inputs.

- Text Summarization: GPT can summarize long documents or articles by extracting key information and presenting it concisely.

- Question Answering: GPT can understand complex questions and provide relevant answers based on its knowledge and understanding of language patterns.

Advantages and Limitations of GPT

GPT has proven to be a powerful model in NLP, but it comes with limitations as well as advantages:

Advantages:

- Contextual Understanding: GPT excels at capturing contextual information within text, allowing it to generate coherent and contextually relevant responses.

- Generative Capability: GPT’s generative nature enables it to produce new content that is similar to human-generated text, making it highly valuable for content generation tasks.

- Transfer Learning: GPT’s pre-training phase enables it to learn from vast amounts of data, allowing for effective transfer learning to specific downstream tasks with limited labeled data.

Limitations:

- Lack of Common Sense Reasoning: While GPT can generate text that appears human-like, it often lacks common sense reasoning and may produce incorrect or nonsensical responses in certain situations.

- Ethical Concerns: GPT’s ability to generate highly realistic text raises concerns about misuse or potential unethical applications, such as generating fake news or deepfakes.

- Computational Resources: Training and fine-tuning large-scale GPT models requires significant computational resources, limiting their accessibility for smaller projects or individuals with limited resources.

Frequently Asked Questions about GPT (Generative Pre-trained Transformer):

GPT means Generative Pre-trained Transformer. It’s a powerful deep learning model created by OpenAI for NLP tasks.

The full form of GPT is Generative Pre-trained Transformer. It is a generative model that uses pre-training and a transformer architecture to process sequential data.

GPT works by pre-training on a large corpus of text data to develop an understanding of language patterns. It is then fine-tuned on specific tasks using supervised learning to adapt to real-world applications.

GPT has various applications, including language translation, content generation, chatbots, text summarization, and question answering.

GPT has advantages like understanding context, generating content, and transferring learning to specific tasks.

GPT has limitations. It lacks common sense reasoning and raises ethical concerns about misuse. It also requires significant computational resources.

Yes, GPT can translate languages. Give it text in the source language, and it can generate a translation in the target language.

Yes, GPT is helpful for writing articles, product descriptions, or creative writing because it can generate human-like text.

Yes, GPT can power chatbots and virtual assistants by generating natural language responses based on user queries or inputs.

No, GPT often lacks common sense reasoning and may produce incorrect or nonsensical responses in certain situations.

Yes, there are ethical concerns regarding the potential misuse of GPT’s ability to generate highly realistic text, such as generating fake news or deepfakes.

Training and fine-tuning large-scale GPT models require significant computational resources, which can limit accessibility for smaller projects or individuals with limited resources.

Conclusion

In conclusion, GPT (Generative Pre-trained Transformer) is a powerful deep learning model that has revolutionized Natural Language Processing. Its ability to generate coherent and contextually relevant text has paved the way for numerous applications in language translation, content generation, chatbots, and more. While GPT has its advantages and limitations, it continues to drive advancements in NLP research and applications, pushing the boundaries of what machines can achieve in understanding and generating human language.